Behind the Scenes of Nexxen’s Kubernetes Deployments: Crafting Scalable Systems

By: Orel Lazri and Aviad Haham

At Nexxen, we faced the challenge of designing a scalable and robust system capable of managing a rapidly growing number of deployments. This was crucial to ensure that our infrastructure could handle the increasing demands and complexity of our operations. To address this, we focused on building a deployment pipeline that is both seamless and powerful.

One use case within our engineering teams involved deploying a new microservices-based data pipeline system. This system must process vast amounts of data in real time, remain highly available during peak processing hours, and integrate seamlessly with our existing services. Our Kubernetes deployment strategy played a pivotal role in meeting these requirements: it allows us to efficiently manage the lifecycle of these microservices, leverage GitOps practices for consistent deployments, and quickly accommodate scaling demands with new environments and clusters.

Let’s dive into the key components that make our deployment pipeline efficient, reliable, and capable of supporting our expansive and dynamic environment. Each element plays a vital role in ensuring that our deployments are smooth and our systems remain resilient. By examining these components, we can better understand how they work together to create a streamlined process that meets our high standards for performance and scalability.

GitOps: Argo CD ApplicationSets & Self-Management

At the core of our scalable deployment strategy is GitOps, a paradigm that uses Git repositories as the single source of truth for both our infrastructure and application configurations. We implement this approach with Argo CD, a declarative GitOps continuous delivery tool specifically designed for Kubernetes. By utilizing Argo CD ApplicationSets, we can effortlessly manage multiple applications, environments (both production and non-production) and clusters. This self-management capability ensures that our deployments are not only consistent and reproducible, but also scalable to meet our growing needs.
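As a rough illustration of how one ApplicationSet can fan out to many clusters, the sketch below builds an ApplicationSet manifest as a Python dictionary and prints it as JSON (which `kubectl` accepts alongside YAML). It uses Argo CD's cluster generator, which produces one Application per registered cluster matching a label selector. The repository URL, application name, path, `env` label, and `argocd` namespace are all hypothetical placeholders, not our actual configuration.

```python
import json


def build_applicationset(name, repo_url, path, env_label):
    """Return an Argo CD ApplicationSet manifest as a plain dict.

    The cluster generator yields one Application per cluster registered
    in Argo CD whose labels match `env_label` (a hypothetical label key
    `env` is assumed here).
    """
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "ApplicationSet",
        "metadata": {"name": name, "namespace": "argocd"},  # assumed namespace
        "spec": {
            "generators": [
                {"clusters": {"selector": {"matchLabels": {"env": env_label}}}}
            ],
            "template": {
                # {{name}} and {{server}} are filled in by the cluster generator.
                "metadata": {"name": name + "-{{name}}"},
                "spec": {
                    "project": "default",
                    "source": {
                        "repoURL": repo_url,
                        "targetRevision": "HEAD",
                        "path": path,
                    },
                    "destination": {"server": "{{server}}", "namespace": name},
                    "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
                },
            },
        },
    }


# Hypothetical values for illustration only.
manifest = build_applicationset(
    name="data-pipeline",
    repo_url="https://git.example.com/platform/deployments.git",
    path="apps/data-pipeline",
    env_label="production",
)
print(json.dumps(manifest, indent=2))
```

With a definition along these lines, adding a cluster that carries the matching label is enough for Argo CD to generate a new Application for it, without editing the ApplicationSet itself.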

Furthermore, our deployment approach takes advantage of Argo CD's flexibility to support deployments using Kustomize, Helm, and native Kubernetes manifests. This generic approach allows us to standardize our deployment process while still using the most appropriate tool for each scenario. By integrating these tools, we keep our deployment pipeline efficient and adaptable, regardless of the complexity or requirements of the applications we are deploying.

Infrastructure as Code: Empowering Rapid Deployment

With Infrastructure as Code, we can bootstrap our entire set of infrastructure services in a matter of minutes. This includes provisioning AWS resources, setting up VPCs, configuring EKS clusters, and installing essential tools like Argo CD. Automating these tasks reduces manual intervention and human error, ensuring that every deployment is identical and adheres to our security and performance standards. As a result, the foundational infrastructure for a new environment is ready within minutes.

Our Infrastructure as Code is implemented using Git and Terraform. By keeping the Terraform code in Git, we ensure that all our infrastructure code is tracked, versioned, and collaboratively managed. This brings the usual benefits of version control: change tracking, collaboration, and consistency.

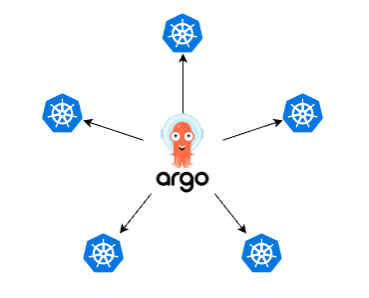

Hub and Spoke Architecture

The hub-and-spoke architecture is a model that centralizes control and management while distributing deployments to various environments. In our setup:

- Hub: The central management cluster where Argo CD is installed. This hub is responsible for managing multiple Kubernetes clusters (spokes). Our Argo CD is deployed to an AWS EKS cluster using Terraform.

- Spoke: Individual clusters representing different environments.

This architecture provides several advantages:

- Centralized Management: Simplifies the management of multiple clusters.

- Scalability: Easily adds new clusters without changing the core setup.

- Isolation: Maintains separation between environments, enhancing security and stability.

By leveraging a hub-and-spoke architecture, we have centralized the management of our Kubernetes clusters while maintaining the flexibility and isolation of individual environments.
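To make the hub-and-spoke relationship more concrete, here is a minimal sketch of one declarative way Argo CD supports for registering spoke clusters on the hub: a Kubernetes Secret labeled `argocd.argoproj.io/secret-type: cluster`. This is an assumption about the mechanism rather than a description of our exact setup. The cluster names, server URLs, `env` label, `argocd` namespace, and the minimal `config` payload are hypothetical; a real EKS spoke would also need authentication details in `config`.

```python
import json


def cluster_secret(name, server, env):
    """Return a Secret manifest that registers a spoke cluster with Argo CD.

    Argo CD treats Secrets carrying the label
    `argocd.argoproj.io/secret-type: cluster` as cluster definitions.
    The extra `env` label is a hypothetical convention that a label
    selector could match on.
    """
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": f"cluster-{name}",
            "namespace": "argocd",  # assumed namespace of the hub's Argo CD
            "labels": {
                "argocd.argoproj.io/secret-type": "cluster",
                "env": env,
            },
        },
        "type": "Opaque",
        "stringData": {
            "name": name,
            "server": server,
            # Placeholder connection settings; credentials are omitted here.
            "config": json.dumps({"tlsClientConfig": {"insecure": False}}),
        },
    }


# Hypothetical spokes for illustration only.
spokes = [
    cluster_secret("prod-us-east-1", "https://prod.example.internal", "production"),
    cluster_secret("staging-us-east-1", "https://staging.example.internal", "staging"),
]
for secret in spokes:
    print(json.dumps(secret, indent=2))
```

Under this model, adding a spoke means adding one more Secret on the hub, while the hub's own configuration stays unchanged.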
